It has been almost 2 years since we released Nx Cloud 2.0. Since then, it has saved over 400 years of computation by leveraging its distributed caching and task execution. And we keep adding 8 more years every single week. This saved compute is not only good for the environment, it also helps developers be more productive and companies save money.

In the last couple of months we have quadrupled the team and have done some amazing things. And we have some big plans for what is coming next. Here’s all you need to know!

Table of Contents

- New, Streamlined UI

- Prefetching and Faster Cache Uploading

- DTE Just got Better

- Direct Integration With GitHub, GitLab and Bitbucket

- Enterprise Support

- New, Simplified Plans and Pricing Model

- Coming Next

- Learn more


New, Streamlined UI

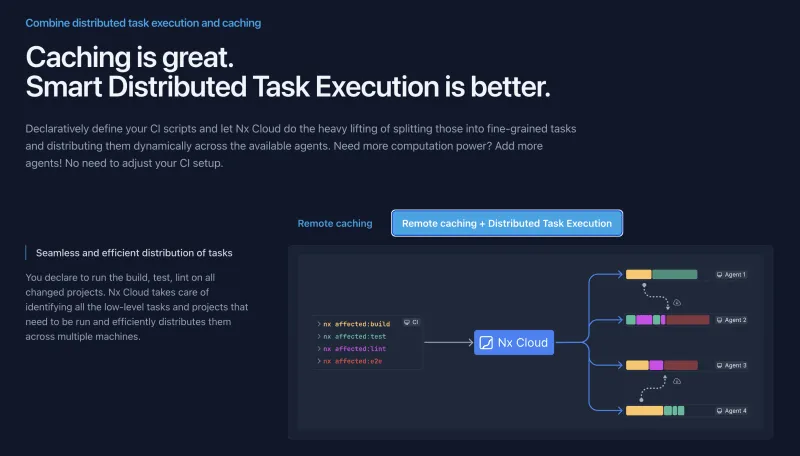

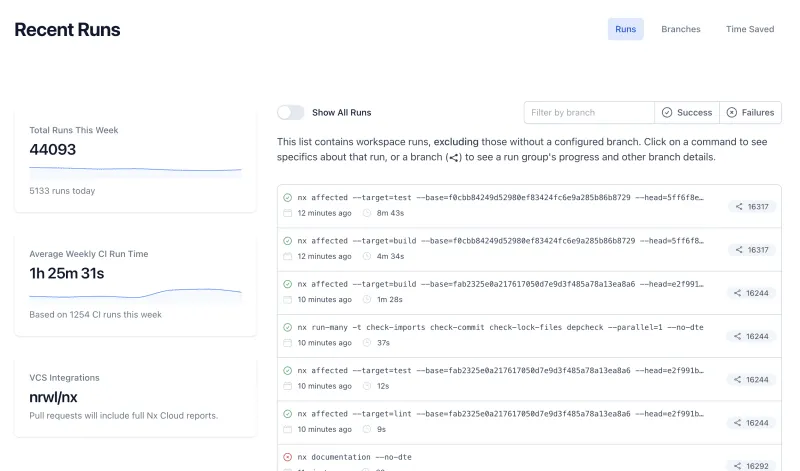

This latest release of Nx Cloud comes with a new, streamlined design that offers users a more modern and visually appealing experience. This includes the main Nx Cloud website, where we improved our messaging and added interactive visualizations that better explain core concepts around remote caching and distributed task execution.

The Nx Cloud application — showing your runs, cache saved, and stats — also got a significant overhaul, making the UI more lightweight and easier to parse.

More UI-related updates and improvements are already underway.

Prefetching and Faster Cache Uploading

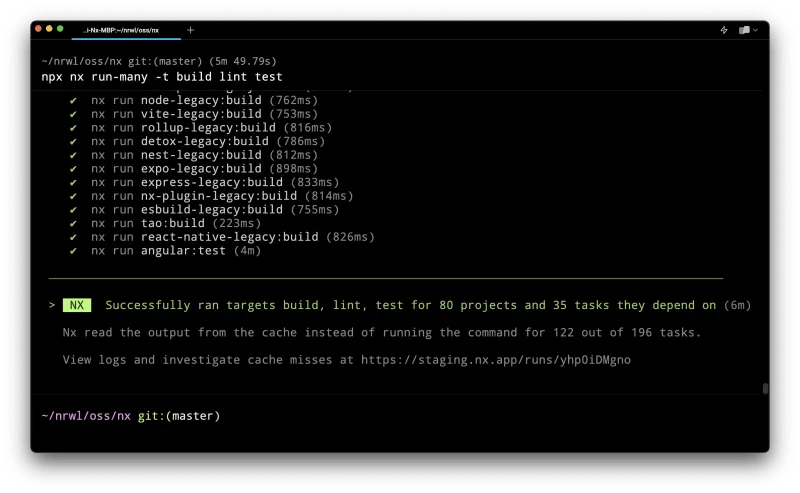

At Nx, we are performance addicts! Especially when it comes to local development, every millisecond counts! In the latest update of the Nx CLI, we added the ability to offload some of the remote cache management to the Nx Daemon. As a result, you no longer have to wait for the cache to upload: the command completes right away and immediately gives you the link to the run. We also prefetch cache results in the background so they are ready when needed.

Both optimizations can save seconds.
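To make the idea of "not waiting for the upload" concrete, here is a minimal TypeScript sketch of the general pattern. It is not the Nx Daemon's implementation: `BackgroundUploader`, `Artifact`, `UploadFn`, and `runTask` are hypothetical names invented for this illustration. The command produces its output, hands the artifact to a background worker, and returns without awaiting the upload.

```typescript
// Hypothetical types for illustration; not part of the Nx or Nx Cloud API.
type Artifact = { hash: string; output: string };
type UploadFn = (artifact: Artifact) => Promise<void>;

class BackgroundUploader {
  private readonly upload: UploadFn;
  private pending: Promise<void>[] = [];

  constructor(upload: UploadFn) {
    this.upload = upload;
  }

  // Start the upload without awaiting it, so the caller can return right away.
  enqueue(artifact: Artifact): void {
    const job = this.upload(artifact).catch((err: unknown) => {
      // A failed upload should not fail the command that produced the artifact.
      console.warn(`upload of ${artifact.hash} failed:`, err);
    });
    this.pending.push(job);
  }

  get pendingCount(): number {
    return this.pending.length;
  }

  // Wait for every upload started so far, e.g. before the background process exits.
  async flush(): Promise<void> {
    const jobs = this.pending;
    this.pending = [];
    await Promise.all(jobs);
  }
}

// The "command": produces its output, hands the artifact off, and returns.
function runTask(hash: string, uploader: BackgroundUploader): string {
  const output = `built ${hash}`;
  uploader.enqueue({ hash, output });
  return output;
}
```

In this sketch, `runTask` returns as soon as the output exists, while the long-lived process that owns the uploader calls `flush()` when it needs to be sure everything has been sent.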

DTE Just got Better

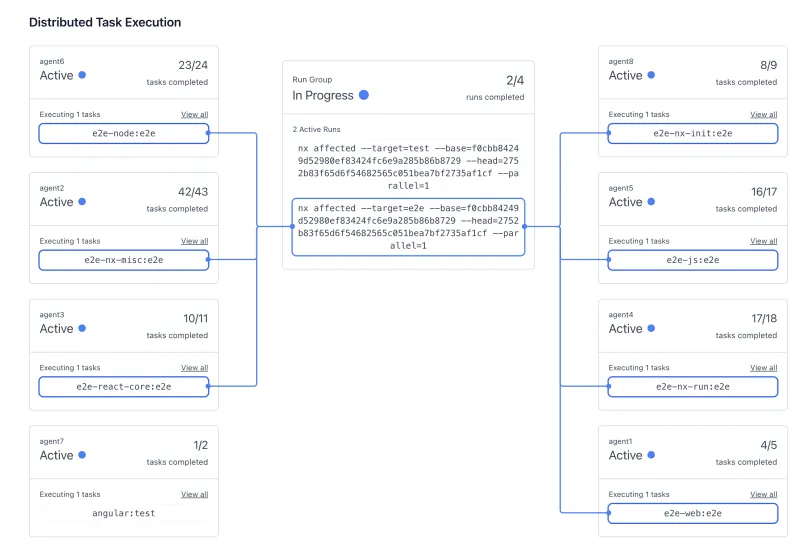

Distributed Task Execution (DTE for short) is a core part of what makes Nx Cloud stand out compared to other solutions. We made significant improvements to both its ergonomics and its speed.

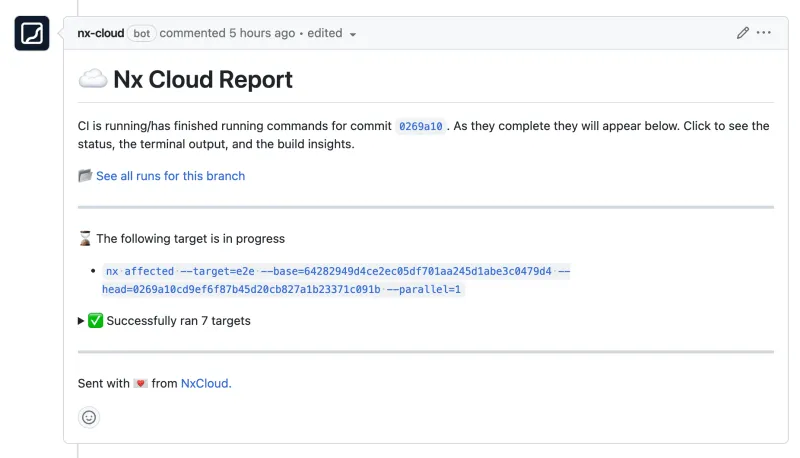

Identify failed tasks early — You can now view information about in-progress DTEs, allowing you to quickly identify and address any failed tasks without waiting for the command to complete.

Simplified CI setup — We simplified the setup process for most CI systems, eliminating the need to pass environment variables manually. Instead, everything is automatically derived from the context.

Improved performance and efficiency — Nx Cloud now figures out which tasks will be cache hits before sending anything to agents. Instead of dispatching those tasks to an agent, it sends them directly to the main job, reducing unnecessary round trips and drastically speeding up CI runs.

Efficient agent management — We have introduced a new command, `npx nx-cloud start-ci-run --stop-agents-after=e2e`, that allows you to notify Nx Cloud when specific commands, such as long-running e2e tasks, have started. This helps Nx Cloud proactively identify and shut down agents that will not be needed, improving compute efficiency.

Reduce fixed costs — Even though cache hits are basically free, spinning up processes still has fixed costs. We fixed that (see what I did there) by leveraging a new Nx feature that lets us keep a long-running process on an agent and feed it new tasks as needed. This removed the DTE overhead and also improved the predictability of runs.
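The cache-hit routing described under "Improved performance and efficiency" can be pictured with a small TypeScript sketch. This illustrates the idea only; it is not Nx Cloud's actual scheduler. The `Task` and `DistributionPlan` shapes and the `planDistribution` function are hypothetical. Tasks whose hash is already in the remote cache stay on the main job, and only the remaining tasks go to agents.

```typescript
// Hypothetical shapes for illustration; not the Nx Cloud data model.
interface Task {
  id: string;   // e.g. "app:build"
  hash: string; // computation hash used as the cache key
}

interface DistributionPlan {
  mainJob: Task[]; // known cache hits, replayed directly on the main job
  agents: Task[];  // cache misses, which need real work on an agent
}

function planDistribution(
  tasks: Task[],
  remoteCacheHashes: Set<string>
): DistributionPlan {
  const plan: DistributionPlan = { mainJob: [], agents: [] };
  for (const task of tasks) {
    if (remoteCacheHashes.has(task.hash)) {
      plan.mainJob.push(task); // no round trip to an agent
    } else {
      plan.agents.push(task);
    }
  }
  return plan;
}
```

With three tasks of which two are already cached, only one task would be sent to an agent; the other two are restored from the cache on the main job.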

Direct Integration With GitHub, GitLab and Bitbucket

Our GitHub integration has been enhanced. Now, the list of runs is updated in real-time as soon as they are created or their status changes, providing developers with up-to-date information directly on GitHub.

In addition to GitHub, we expanded our Nx Cloud live status updates to work on GitLab and Bitbucket.

Enterprise Support

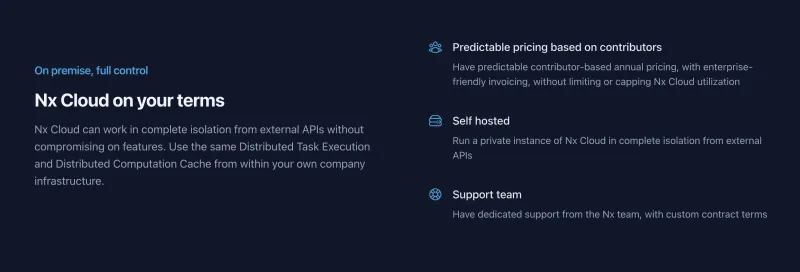

We have extensive experience working with Fortune 500 companies, helping them scale their development using monorepos. This has given us valuable insight into the unique security requirements of these companies. Our Enterprise plan reflects that, allowing organizations to run a fully self-contained version of Nx Cloud that is hosted on their own servers and comes with dedicated support from the Nx and Nx Cloud core team.

We’ve recently made a couple of improvements to our enterprise offering.

- Helm Charts — We added a Helm chart to simplify the process of deploying Nx Cloud to on-premises infrastructure, allowing organizations to quickly set up and manage their own instance of Nx Cloud within their secure environment.

- Stability improvements — We significantly reworked our on-premises solution to be identical to our SaaS deployment. This revamp resulted in a more robust and reliable on-premises deployment of Nx Cloud, ensuring enterprise-grade performance and reliability.

- SSO — We now support AWS Identity and Access Management (IAM) for seamless integration with existing AWS environments and the SAML protocol for a more flexible single sign-on integration across various providers. This enables organizations to leverage their existing identity management systems for authentication and authorization.

Learn more at enterprise.

New, Simplified Plans and Pricing Model

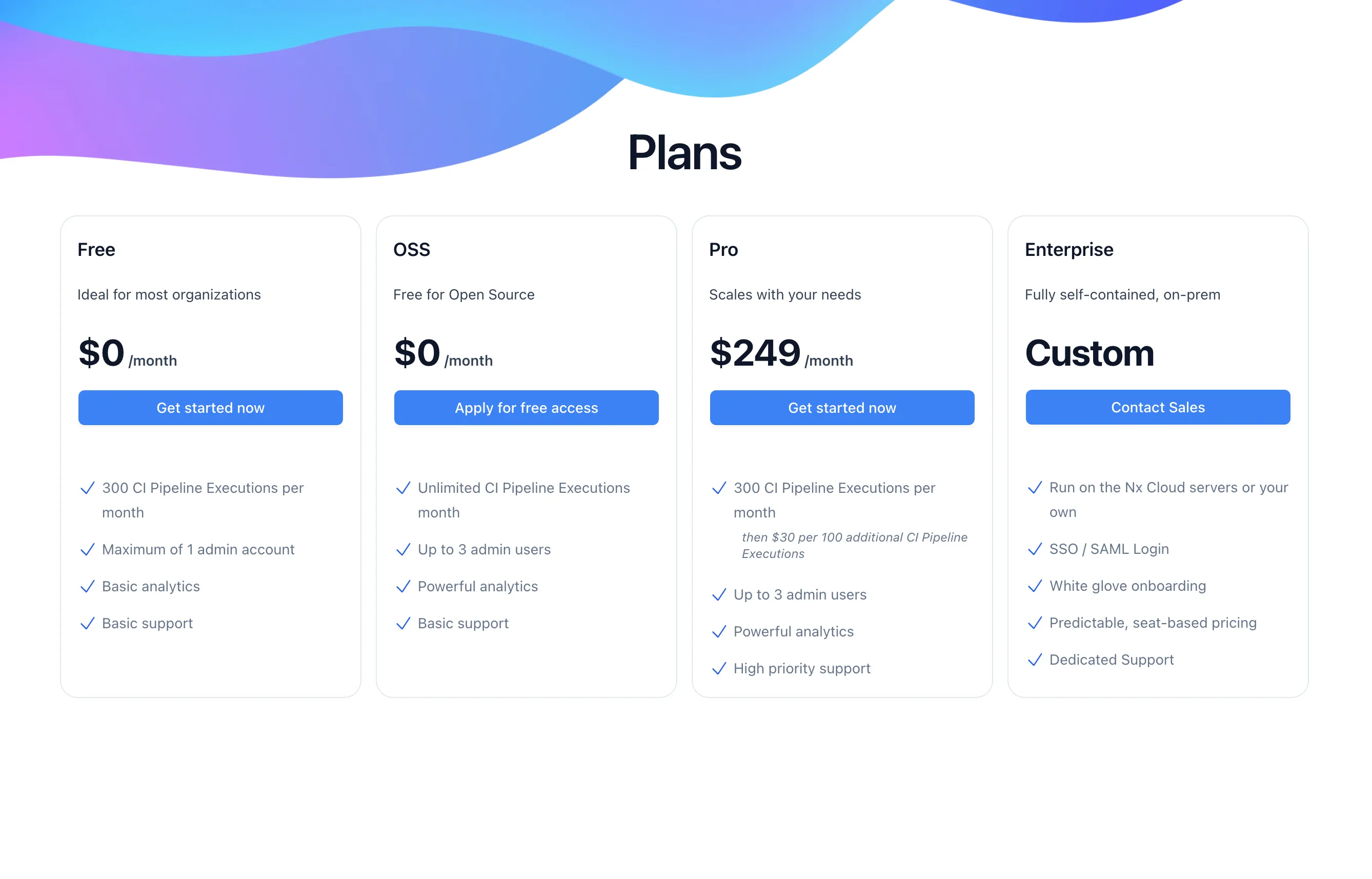

Nx Cloud has evolved a lot since we first released it in 2020, and is changing even more in 2023. To better adapt to Nx Cloud being a critical CI tool, we changed our pricing model to be more consistent and predictable for CI workloads.

Nx Cloud’s previous pricing was based on time savings from Nx Cloud, which made sense when Nx Cloud was strictly a distributed caching service. The new pricing model is based entirely on the number of CI pipeline executions per calendar month. We believe this is a simpler and more transparent model that should help you predict your costs far more easily.

Our Free plan allows one administrator and up to 300 CI pipeline executions per month. Our Pro plan allows more administrators and uncapped CI pipeline executions, with a flat fee plus incremental charges per 100 CI pipeline executions. The OSS plan comes with unlimited CI pipeline executions. Nx has been open source from the beginning and we care a lot about that ecosystem, so this is our contribution to help OSS projects make their CI pipeline executions faster.

Finally, the Enterprise plan is for companies that want full control over where their data is hosted, hands-on, dedicated support from the Nx & Nx Cloud team, and enterprise features such as SSO & SAML-based authentication support.

All these changes should allow developers to choose the plan that best suits their needs and budget more easily, ensuring a seamless and transparent experience regarding pricing and subscription management.
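As a rough illustration of how an execution-based bill can be estimated, here is a small TypeScript sketch. Only the 300-execution Free plan limit comes from this post. The `ProPricing` shape, the function names, and the assumption that every started block of 100 executions is billed are hypothetical, and the numbers in the usage note are placeholders, not real prices. Check the pricing page for the actual terms.

```typescript
// From the post: the Free plan covers up to 300 CI pipeline executions per month.
const FREE_PLAN_MONTHLY_EXECUTIONS = 300;

// Hypothetical pricing inputs; the real values are on the pricing page.
interface ProPricing {
  flatFee: number;     // fixed monthly fee
  pricePer100: number; // incremental charge per 100 CI pipeline executions
}

function fitsFreePlan(executions: number): boolean {
  return executions <= FREE_PLAN_MONTHLY_EXECUTIONS;
}

// Assumption: every started block of 100 executions is billed in full.
function estimateProMonthlyCost(executions: number, pricing: ProPricing): number {
  const blocks = Math.ceil(executions / 100);
  return pricing.flatFee + blocks * pricing.pricePer100;
}
```

With placeholder values of `flatFee: 10` and `pricePer100: 1`, a month with 1,250 executions spans 13 blocks of 100, so the estimate is 10 + 13 × 1 = 23 in whatever currency unit the inputs use.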

Learn more at https://nx.dev/pricing.

Coming Next

We’ve got some big plans for Nx Cloud. Ideally, you write your CI script by focusing on what you want to achieve, not on how to make it fast. We’re going to make this happen!

The current Distributed Task Execution (DTE) already goes a long way, but you still have to provision the agents yourself. Providing the correct number of agents is crucial for maximizing efficiency and reducing idle time. And it is not even a static number; it may depend on the actual run. Nx has extensive knowledge about the structure of the workspace and the corresponding tasks. We want to leverage that information. Imagine a setup where you have a roughly 20-line CI config for a repo with hundreds of developers, and Nx Cloud automatically determines the ideal number of agents for each run, provisions them, distributes all tasks efficiently, and then disposes of all agents again. All fully automatically. And it will be fast. To the point where you wouldn’t even need your Jenkins, CircleCI, etc. at all.
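To see why the right number of agents depends on the run, consider this deliberately naive TypeScript sketch. It is not how Nx Cloud does or will compute agent counts. `TimedTask`, `estimateAgentCount`, and its parameters are hypothetical, and the heuristic ignores dependencies between tasks. It simply divides the estimated total work by a target wall-clock time and caps the result.

```typescript
// Hypothetical shape: a task with a duration estimate, e.g. from past runs.
interface TimedTask {
  id: string;
  estimatedSeconds: number;
}

// Naive heuristic: enough agents to finish the total work within the target
// time, but never more agents than tasks or than the configured maximum.
function estimateAgentCount(
  tasks: TimedTask[],
  targetSeconds: number,
  maxAgents: number
): number {
  if (targetSeconds <= 0) {
    throw new RangeError("targetSeconds must be positive");
  }
  if (tasks.length === 0) {
    return 0; // nothing to run, so no agents are needed
  }
  const totalSeconds = tasks.reduce((sum, t) => sum + t.estimatedSeconds, 0);
  const needed = Math.ceil(totalSeconds / targetSeconds);
  return Math.max(1, Math.min(needed, tasks.length, maxAgents));
}
```

A small change that affects one 60-second task needs a single agent under this heuristic, while a change that affects twenty 10-minute tasks hits the cap. A fixed agent count would be wasteful in the first case and too slow in the second.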

In addition, we are actively exploring ways to provide advanced analytics for your workspace, including insights into the frequency and duration of specific tasks. This valuable information can help identify large tasks that could benefit from being broken down into smaller ones, leveraging caching and other speed improvements to optimize performance. Stay tuned for more to come!